Differential privacy (DP) is a property of randomized mechanisms that limits the influence of any individual user’s information while processing and analyzing data. DP offers a robust solution to address growing concerns about data protection, enabling technologies across industries and government applications (e.g., the US census) without compromising individual user identities. As its adoption increases, it’s important to identify the potential risks of developing mechanisms with faulty implementations. Researchers have recently found errors in the mathematical proofs of private mechanisms, and in their implementations. For example, researchers compared six sparse vector technique (SVT) variations and found that only two of the six actually met the asserted privacy guarantee. Even when mathematical proofs are correct, the code implementing the mechanism is vulnerable to human error.

However, practical and efficient DP auditing is challenging, primarily due to the inherent randomness of the mechanisms and the probabilistic nature of the tested guarantees. In addition, a range of guarantee types exist (e.g., pure DP, approximate DP, Rényi DP, and concentrated DP), and this diversity contributes to the complexity of formulating the auditing problem. Further, debugging mathematical proofs and code bases is an intractable task given the volume of proposed mechanisms. While ad hoc testing techniques exist under specific assumptions about mechanisms, few efforts have been made to develop an extensible tool for testing DP mechanisms.

To that end, in “DP-Auditorium: A Large Scale Library for Auditing Differential Privacy”, we introduce an open source library for auditing DP guarantees with only black-box access to a mechanism (i.e., without any knowledge of the mechanism’s internal properties). DP-Auditorium is implemented in Python and provides a flexible interface that allows contributions to continuously improve its testing capabilities. We also introduce new testing algorithms that perform divergence optimization over function spaces for Rényi DP, pure DP, and approximate DP. We demonstrate that DP-Auditorium can efficiently identify DP guarantee violations, and suggest which tests are most suitable for detecting particular bugs under various privacy guarantees.

DP guarantees

The output of a DP mechanism is a sample drawn from a probability distribution, M(D), that satisfies a mathematical property ensuring the privacy of user data. A DP guarantee is thus tightly related to properties between pairs of probability distributions. A mechanism is differentially private if the probability distributions determined by M on dataset D and a neighboring dataset D’, which differ by only one record, are indistinguishable under a given divergence metric.

For example, the classical approximate DP definition states that a mechanism is approximately DP with parameters (ε, δ) if the hockey-stick divergence of order e^ε between M(D) and M(D’) is at most δ. Pure DP is a special instance of approximate DP where δ = 0. Finally, a mechanism is considered Rényi DP with parameters (𝛼, ε) if the Rényi divergence of order 𝛼 between M(D) and M(D’) is at most ε (where ε is a small positive value). In these three definitions, ε is not interchangeable but intuitively conveys the same concept: larger values of ε imply larger divergences between the two distributions, or less privacy, since the two distributions are easier to distinguish.
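For reference, the two divergences named above have the following standard textbook forms. The notation is an assumption for illustration: P = M(D) and Q = M(D’), with densities p and q, and S ranging over measurable sets of outputs.

```latex
\begin{align}
  H_{e^{\varepsilon}}(P \,\|\, Q)
    &= \sup_{S} \bigl[ P(S) - e^{\varepsilon} Q(S) \bigr]
     = \int \max\bigl(0,\; p(x) - e^{\varepsilon} q(x)\bigr)\, dx, \\
  D_{\alpha}(P \,\|\, Q)
    &= \frac{1}{\alpha - 1}
       \log \mathbb{E}_{x \sim Q}\!\left[ \left( \frac{p(x)}{q(x)} \right)^{\alpha} \right],
       \qquad \alpha > 1.
\end{align}
```

Under these definitions, (ε, δ)-approximate DP asks that the hockey-stick divergence be at most δ, and (𝛼, ε)-Rényi DP asks that the Rényi divergence be at most ε, in both cases for every pair of neighboring datasets and in both orderings of the pair.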

DP-Auditorium

DP-Auditorium comprises two main components: property testers and dataset finders. Property testers take samples from a mechanism evaluated on specific datasets as input and aim to identify privacy guarantee violations in the provided datasets. Dataset finders suggest datasets where the privacy guarantee may fail. By combining both components, DP-Auditorium enables (1) automated testing of diverse mechanisms and privacy definitions and (2) detection of bugs in privacy-preserving mechanisms. We implement various private and non-private mechanisms, including simple mechanisms that compute the mean of records and more complex mechanisms, such as different SVT and gradient descent mechanism variants.

Property testers determine if evidence exists to reject the hypothesis that a given divergence between two probability distributions, P and Q, is bounded by a prespecified budget determined by the DP guarantee being tested. They compute a lower bound from samples from P and Q, rejecting the property if the lower bound value exceeds the budget. No guarantees are provided if the lower bound stays within the budget. To test for a range of privacy guarantees, DP-Auditorium introduces three novel testers: (1) HockeyStickPropertyTester, (2) RényiPropertyTester, and (3) MMDPropertyTester. Unlike other approaches, these testers don’t depend on explicit histogram approximations of the tested distributions. They rely on variational representations of the hockey-stick divergence, Rényi divergence, and maximum mean discrepancy (MMD) that enable the estimation of divergences through optimization over function spaces. As a baseline, we implement HistogramPropertyTester, a commonly used approximate DP tester. While our three testers follow a similar approach, for brevity, we focus on the HockeyStickPropertyTester in this post.

Given two neighboring datasets, D and D’, the HockeyStickPropertyTester finds a lower bound, δ̂, for the hockey-stick divergence between M(D) and M(D’) that holds with high probability. Hockey-stick divergence enforces that the two distributions M(D) and M(D’) are close under an approximate DP guarantee. Therefore, if a privacy guarantee claims that the hockey-stick divergence is at most δ, and δ̂ > δ, then with high probability the divergence is higher than what was promised on D and D’ and the mechanism cannot satisfy the given approximate DP guarantee. The lower bound δ̂ is computed as an empirical and tractable counterpart of a variational formulation of the hockey-stick divergence (see the paper for more details). The accuracy of δ̂ increases with the number of samples drawn from the mechanism, but decreases as the variational formulation is simplified. We balance these factors in order to ensure that δ̂ is both accurate and easy to compute.
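To make the idea of a sample-based lower bound concrete, here is a minimal sketch. It is not the HockeyStickPropertyTester implementation. It assumes scalar mechanism outputs, uses the standard variational form of the hockey-stick divergence (a supremum of E_P[f] − e^ε E_Q[f] over functions f with values in [0, 1]), restricts f to simple threshold functions as a stand-in for a richer function class, and subtracts a Hoeffding term so the result holds with probability at least 1 − `failure_prob`. The function name and all parameters are hypothetical.

```python
import numpy as np


def hockey_stick_lower_bound(samples_p, samples_q, epsilon, failure_prob=0.05):
    """Illustrative lower bound on the hockey-stick divergence of order e^epsilon.

    Assumption: witnesses are threshold functions f_t(x) = 1[x > t]. Any fixed f
    with values in [0, 1] gives E_P[f] - e^eps * E_Q[f] <= divergence, so a
    held-out estimate minus a Hoeffding slack is a high-probability lower bound.
    """
    samples_p = np.asarray(samples_p, dtype=float)
    samples_q = np.asarray(samples_q, dtype=float)
    half_p, half_q = len(samples_p) // 2, len(samples_q) // 2
    train_p, test_p = samples_p[:half_p], samples_p[half_p:]
    train_q, test_q = samples_q[:half_q], samples_q[half_q:]

    # Pick the threshold that maximizes the objective on the training split.
    pooled = np.concatenate([train_p, train_q])
    thresholds = np.quantile(pooled, np.linspace(0.01, 0.99, 99))
    train_objective = [
        (train_p > t).mean() - np.exp(epsilon) * (train_q > t).mean()
        for t in thresholds
    ]
    best_t = thresholds[int(np.argmax(train_objective))]

    # Evaluate the chosen witness on the held-out split, so Hoeffding applies.
    estimate = (test_p > best_t).mean() - np.exp(epsilon) * (test_q > best_t).mean()

    # One-sided Hoeffding slack for each mean, with a union bound over both.
    slack_p = np.sqrt(np.log(2.0 / failure_prob) / (2.0 * len(test_p)))
    slack_q = np.sqrt(np.log(2.0 / failure_prob) / (2.0 * len(test_q)))
    return float(estimate - slack_p - np.exp(epsilon) * slack_q)
```

A tester built on this sketch would flag a violation whenever the returned value exceeds the claimed δ. The sketch checks only one ordering of the pair; a full check would also swap the roles of the two sample sets. Restricting to thresholds illustrates the trade-off described above: a simpler function class is cheaper to optimize but may yield a looser bound.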

Dataset finders use black-box optimization to find datasets D and D’ that maximize δ̂, a lower bound on the divergence value δ. Note that black-box optimization techniques are specifically designed for settings where deriving gradients for an objective function may be impractical or even impossible. These optimization techniques oscillate between exploration and exploitation phases to estimate the shape of the objective function and predict areas where the objective can have optimal values. In contrast, a full exploration algorithm, such as the grid search method, searches over the full space of neighboring datasets D and D’. DP-Auditorium implements different dataset finders through the open sourced black-box optimization library Vizier.
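The search loop can be pictured with the simplest randomized strategy, plain random search. This sketch does not use Vizier and does not reflect the library’s finder interface. It assumes records are scalars in [0, 1], that neighboring datasets differ by replacing one record, and that a hypothetical `score_fn(d, d_prime)` returns the lower bound δ̂ (or any other objective) for a candidate pair.

```python
import numpy as np


def random_neighbor_search(score_fn, dataset_size, num_trials, seed=0):
    """Illustrative dataset finder: random search over neighboring pairs.

    Assumptions: records lie in [0, 1]; D' is D with exactly one record
    replaced; score_fn(d, d_prime) is the objective to maximize.
    """
    rng = np.random.default_rng(seed)
    best_score, best_pair = -np.inf, None
    for _ in range(num_trials):
        d = rng.uniform(0.0, 1.0, size=dataset_size)
        d_prime = d.copy()
        # Replace a single, randomly chosen record to obtain a neighbor.
        d_prime[rng.integers(dataset_size)] = rng.uniform(0.0, 1.0)
        score = score_fn(d, d_prime)
        if score > best_score:
            best_score, best_pair = score, (d, d_prime)
    return best_pair, best_score
```

An exploration/exploitation optimizer would replace the uniform sampling with proposals informed by earlier scores, while a grid search would enumerate a fixed lattice of pairs instead.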

Running existing components on a new mechanism only requires defining the mechanism as a Python function that takes an array of data D and a desired number of samples n to be output by the mechanism computed on D. In addition, we provide flexible wrappers for testers and dataset finders that allow practitioners to implement their own testing and dataset search algorithms.
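A mechanism written against that description might look like the following sketch. The function name, the `epsilon` and `seed` arguments, and the assumption that records lie in [0, 1] are all hypothetical and chosen for illustration; only the shape of the interface (data in, n samples out) comes from the description above.

```python
import numpy as np


def laplace_mean_mechanism(data, num_samples, epsilon=1.0, seed=None):
    """Hypothetical mechanism: epsilon-DP mean of records clipped to [0, 1].

    Returns num_samples independent outputs of the mechanism on `data`.
    """
    rng = np.random.default_rng(seed)
    data = np.clip(np.asarray(data, dtype=float), 0.0, 1.0)
    # Replacing one record in a dataset of size m moves the mean by at most 1/m.
    sensitivity = 1.0 / len(data)
    noise = rng.laplace(loc=0.0, scale=sensitivity / epsilon, size=num_samples)
    return data.mean() + noise
```

Because the auditing is black-box, a tester only ever sees the returned samples, so a faulty variant (for example, one that uses the wrong noise scale) can be swapped in without changing anything else.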

Key results

We assess the effectiveness of DP-Auditorium on five private and nine non-private mechanisms with diverse output spaces. For each property tester, we repeat the test ten times on fixed datasets using different values of ε, and report the number of times each tester identifies privacy bugs. While no tester consistently outperforms the others, we identify bugs that would be missed by previous techniques (HistogramPropertyTester). Note that the HistogramPropertyTester is not applicable to SVT mechanisms.

Table: Number of times each property tester finds the privacy violation for the tested non-private mechanisms. NonDPLaplaceMean and NonDPGaussianMean mechanisms are faulty implementations of the Laplace and Gaussian mechanisms for computing the mean.

We also analyze the implementation of a DP gradient descent algorithm (DP-GD) in TensorFlow that computes gradients of the loss function on private data. To preserve privacy, DP-GD employs a clipping mechanism to bound the l2-norm of the gradients by a value G, followed by the addition of Gaussian noise. This implementation incorrectly assumes that the noise added has a scale of G, while in reality, the scale is sG, where s is a positive scalar. This discrepancy leads to an approximate DP guarantee that holds only for values of s greater than or equal to 1.
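The effect of s can be sketched numerically. The code below is not the TensorFlow implementation under test. It assumes that neighboring datasets differ by adding or removing one record, so the clipped gradient sum has l2-sensitivity G, and it uses the well-known closed form for the δ that a Gaussian mechanism with sensitivity Δ and noise standard deviation σ achieves at a given ε: Φ(Δ/2σ − εσ/Δ) − e^ε Φ(−Δ/2σ − εσ/Δ). With Δ = G and σ = sG, the value G cancels and only s remains. All function names are hypothetical.

```python
import math

import numpy as np


def clipped_noisy_gradient(per_example_grads, clip_norm, s, rng):
    """Clip each per-example gradient to l2-norm clip_norm, sum, add noise.

    The noise standard deviation is s * clip_norm; the accounting in the
    scenario above (incorrectly) assumes s = 1.
    """
    grads = np.asarray(per_example_grads, dtype=float)
    norms = np.linalg.norm(grads, axis=1, keepdims=True)
    factors = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped_sum = (grads * factors).sum(axis=0)
    return clipped_sum + rng.normal(0.0, s * clip_norm, size=grads.shape[1])


def _std_normal_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def gaussian_delta(epsilon, s):
    """delta achieved at epsilon when sensitivity is G and noise std is s * G."""
    return (_std_normal_cdf(1.0 / (2.0 * s) - epsilon * s)
            - math.exp(epsilon) * _std_normal_cdf(-1.0 / (2.0 * s) - epsilon * s))
```

Comparing `gaussian_delta(epsilon, s)` for s < 1 against `gaussian_delta(epsilon, 1.0)` shows how much larger the true δ is than the one implied by accounting that assumes a noise scale of G, which is the gap a property tester has to detect.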

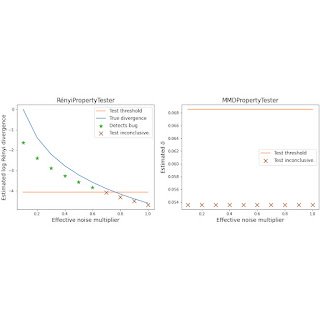

We evaluate the effectiveness of property testers in detecting this bug and show that HockeyStickPropertyTester and RényiPropertyTester exhibit superior performance in identifying privacy violations, outperforming MMDPropertyTester and HistogramPropertyTester. Notably, these testers detect the bug even for values of s as high as 0.6. It’s worth highlighting that s = 0.5 corresponds to a common error in the literature that involves missing a factor of two when accounting for the privacy budget ε. DP-Auditorium successfully captures this bug as shown below. For more details, see section 5.6 of the paper.

Figure: Estimated divergences and test thresholds for different values of s when testing DP-GD with the HistogramPropertyTester (left) and the HockeyStickPropertyTester (right).

Figure: Estimated divergences and test thresholds for different values of s when testing DP-GD with the RényiPropertyTester (left) and the MMDPropertyTester (right).

To test dataset finders, we compute the number of datasets explored before finding a privacy violation. On average, the majority of bugs are discovered in fewer than 10 calls to dataset finders. Randomized and exploration/exploitation methods are more efficient at finding datasets than grid search. For more details, see the paper.

Conclusion

DP is one of the most powerful frameworks for data protection. However, proper implementation of DP mechanisms can be challenging and prone to errors that cannot be easily detected using traditional unit testing methods. A unified testing framework can help auditors, regulators, and academics ensure that private mechanisms are indeed private.

DP-Auditorium is a new approach to testing DP via divergence optimization over function spaces. Our results show that this type of function-based estimation consistently outperforms previous black-box access testers. Finally, we demonstrate that these function-based estimators allow for a better discovery rate of privacy bugs compared to histogram estimation. By open sourcing DP-Auditorium, we aim to establish a standard for end-to-end testing of new differentially private algorithms.

Acknowledgements

The work described here was done jointly with Andrés Muñoz Medina, William Kong and Umar Syed. We thank Chris Dibak and Vadym Doroshenko for helpful engineering support and interface suggestions for our library.