This article is about the strange science of spotting when a machine is pretending to be a human. So, Can You Spot the Bot? In an internet brimming with AI-generated essays, fake reviews, news articles, and even poems, knowing how to tell the difference is becoming more important. Did you know that AI-written content is now so convincing that it once passed a US medical licensing exam and then made up fake studies to justify its answers? It sounds unreal, but it’s true. In 2023, GPT-4 outperformed medical students on the U.S. licensing exam and then backed up its answers with research papers that appeared legitimate but didn’t exist, as reported in an article published in the U.S. National Library of Medicine.

In the following sections, we’ll explore how AI-generated text works, why detecting it is so hard, who’s affected the most, and how you can train your eye to spot it, with no special tools required.

We live in a time when words no longer need a writer. From academic essays and news articles to job applications and even love letters, artificial intelligence can now generate text so convincingly human-like that many readers can’t tell the difference. But beneath that literary prose lies a statistical machine, one that doesn’t think, feel, or understand, but predicts! Whether you’re a professor trying to verify a student’s assignment submission, a research scholar filtering sources, or a curious reader wondering if what you’re reading is human, understanding how AI leaves behind digital fingerprints is key.

AI-generated text is the output produced by a computer model, a set of algorithms, in response to a user-provided prompt. A prompt can be thought of as a hint that helps the model predict the text that should come next. Large language models (LLMs) such as ChatGPT, Claude, Gemini, and Mistral generate text from any prompt in broadly the following manner:

Breaking down words

First, the input sentence or paragraph is broken into tiny pieces called tokens. Think of these as individual words, parts of words (like “on-” or “-ing”), or even punctuation marks.
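To make the idea concrete, here is a minimal sketch of tokenization. It assumes a very simple rule (split into words and punctuation marks) and uses a hypothetical helper named `toy_tokenize`. Real LLMs use learned subword tokenizers, so treat this only as an illustration of the principle.

```python
import re

def toy_tokenize(text: str) -> list[str]:
    """Split text into word tokens and single punctuation tokens (a simplification)."""
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = toy_tokenize("The bottle is kept on the table. It is pink.")
print(tokens)
```

A subword tokenizer would go one step further and split rarer words into smaller pieces, which this sketch does not attempt.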

Turning words into numbers

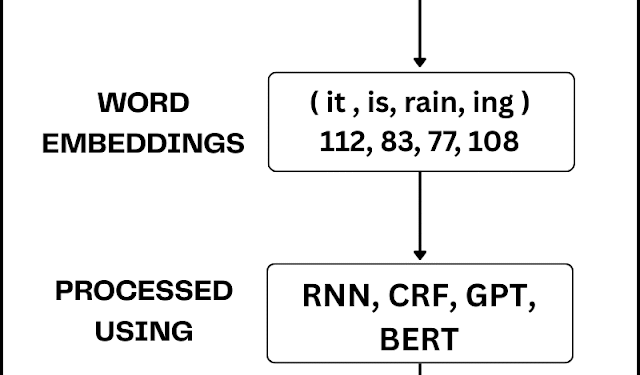

Next, each of these tokens is turned into a set of numbers, using something called word embeddings. As an analogy, it is like assigning coordinates to each token.
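The coordinate analogy can be sketched as a simple lookup table. This is a toy example under stated assumptions: the vocabulary, the vector size of 4, and the helper `build_embeddings` are all made up for illustration, and the numbers are random, whereas a real model learns them during training.

```python
import random

def build_embeddings(vocab: list[str], dim: int = 4, seed: int = 0) -> dict[str, list[float]]:
    """Give every token a vector of `dim` numbers (random here, learned in a real model)."""
    rng = random.Random(seed)  # fixed seed so the table is reproducible
    return {token: [rng.uniform(-1, 1) for _ in range(dim)] for token in vocab}

vocab = ["the", "bottle", "is", "pink"]  # hypothetical mini-vocabulary
table = build_embeddings(vocab)
vector = table["bottle"]  # four numbers acting as this token's "coordinates"
print(vector)
```

In a trained model, tokens with related meanings tend to end up with nearby coordinates, which is what makes the numbers useful.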

The thinking machine

These number-addresses are then fed into a dense deep learning model. This is like the AI’s “brain,” made up of many layers of interconnected “neurons.”

There are three widely implemented ways of creating this brain, according to research conducted at IBM:

1. Statistical models (N-gram models and conditional random fields (CRF)),

2. Neural networks (Recurrent neural networks (RNNs) and Long short-term memory networks (LSTMs)),

3. Transformer-based models (Generative Pretrained Transformer (GPT) and Bidirectional Encoder Representations from Transformers (BERT)).

Using one of these, the AI figures out which words in the input sentence are most important and how they relate to each other. For example, if the given input is “The bottle is kept on the table. It is pink,” a transformer-based model uses self-attention to work out that “it” refers to “the bottle.”
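The self-attention idea can be sketched in a few lines. This is a simplified version that assumes no learned projection matrices (a real transformer has them) and uses hand-picked two-number vectors for “bottle”, “table”, and “it” so the effect is easy to see. The function names are illustrative only.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors: list[list[float]]):
    """Scaled dot-product self-attention without learned projections."""
    dim = len(vectors[0])
    outputs, weights = [], []
    for query in vectors:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in vectors]
        row = softmax(scores)
        weights.append(row)
        # New representation: weighted average of all token vectors.
        outputs.append([sum(w * v[d] for w, v in zip(row, vectors)) for d in range(dim)])
    return outputs, weights

# Hand-picked toy vectors (assumptions): "it" is deliberately placed close to "bottle".
names = ["bottle", "table", "it"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
_, weights = self_attention(vectors)
print(dict(zip(names, weights[2])))  # how much "it" attends to each token
```

With these vectors, “it” places more weight on “bottle” than on “table”, which mirrors the example in the text.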

Predicting the Next Word

For prediction, the AI uses mathematics, mainly matrix multiplication and statistics, to figure out which token should come next. It calculates how likely each possible token is to appear. A function called softmax is commonly used for this purpose: it turns the model’s raw scores into probabilities that add up to 1.
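As a rough sketch of this step, the snippet below applies softmax to a handful of made-up scores for candidate next tokens. The candidate words and their scores are assumptions chosen for illustration. A real model scores every token in its vocabulary.

```python
import math

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw scores into probabilities that sum to 1."""
    top = max(logits.values())  # subtracting the max keeps exp() numerically stable
    exps = {token: math.exp(score - top) for token, score in logits.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}

# Made-up scores for what might follow "The bottle is ..."
logits = {"pink": 2.0, "blue": 1.0, "heavy": 0.1}
probs = softmax(logits)
next_token = max(probs, key=probs.get)  # greedy choice: the most likely token
print(probs, next_token)
```

Picking the single most likely token is only one option. Many systems sample from the probabilities instead, which is part of why the same prompt can produce different answers.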

AI text detection is important for maintaining authenticity and trust, especially in academia and online media. At its root, it is the identification of AI-generated content, so that work can be confirmed as original rather than plagiarised. This is crucial for combating misinformation as well as protecting intellectual property. Detection is done using AI detectors (also called AI writing detectors or AI content detectors), which are tools designed to detect when a text was partially or entirely generated by artificial intelligence (AI) tools such as ChatGPT, Claude, and Gemini. They are useful, for example, to professors who want to check whether their students are completing academic assignments honestly, and to social media moderators trying to remove fake product reviews and other spam content.

This detection algorithm can be reverse-engineered from the generation algorithm as:

Training the Detector:

Researchers collect large, diverse datasets of text written by humans and text generated by language models such as GPT, Claude, and Gemini. This data is then fed into a new model (the detector), which learns to spot the subtle differences between the two.

How does the detector work?

The detector model studies the patterns of AI writing and learns to associate these traits with machine-generated text:

- It tends to use balanced sentence structures.

- It avoids ambiguity, and so often over-explains.

- It uses filler phrases (“In today’s world...”, “It is important to note...”).

- It is unusually consistent and lacks emotional depth or the nuances of real life.
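One of these traits, filler phrases, is easy to measure by hand. The sketch below counts the two phrases quoted above per 100 words. The helper name `filler_rate` and the choice of “per 100 words” are assumptions made for illustration. A trained detector learns its own signals rather than using a fixed phrase list.

```python
# The two example phrases from the list above; a longer list would be an assumption.
FILLER_PHRASES = ("in today's world", "it is important to note")

def filler_rate(text: str) -> float:
    """Return the number of filler-phrase occurrences per 100 words."""
    lowered = text.lower().replace("’", "'")  # treat curly and straight apostrophes alike
    words = lowered.split()
    if not words:
        return 0.0
    hits = sum(lowered.count(phrase) for phrase in FILLER_PHRASES)
    return 100 * hits / len(words)

sample = "In today’s world, it is important to note that AI writes."
print(filler_rate(sample))
```

A high rate is only a weak hint, since plenty of human writers use the same phrases.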

Use of probability and perplexity:

Just as a language model generates words by calculating which one is most likely to come next, the detector uses the same mathematics to score text that already exists: it asks how likely each word was, given the words before it. If the words in a sentence are very predictable (low perplexity), it could mean an AI wrote it. Human writing tends to be more varied and surprising, with higher perplexity (more unpredictability).
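Perplexity has a standard definition: the exponential of the average negative log-probability of the tokens. The sketch below computes it from a list of per-token probabilities. The probability values are made up for illustration. In practice they would come from a language model scoring the text.

```python
import math

def perplexity(token_probs: list[float]) -> float:
    """exp of the average negative log-probability assigned to each token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Made-up probabilities a model might assign to each successive token.
predictable = [0.9, 0.8, 0.9, 0.85]  # the model saw these words coming
surprising = [0.2, 0.05, 0.3, 0.1]   # the model was often caught off guard

print(perplexity(predictable), perplexity(surprising))
```

The predictable sequence gets the lower perplexity, which is the pattern a detector treats as a possible sign of machine writing.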

Entropy and burstiness checks:

As in physics, entropy is a measure of randomness. Burstiness refers to the natural variation in sentence length and structure. AI writing is often too smooth (low burstiness, low entropy), with consistent sentence lengths, no sudden emotional spikes, and well-organised thoughts. Detector models flag that as suspicious.
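Both measures can be approximated with simple statistics. The sketch below makes two assumptions: burstiness is taken to be the variation in sentence length relative to the average length (there is no single agreed definition), and entropy is computed over word frequencies using Shannon's formula. The function names are illustrative.

```python
import math
import re
import statistics
from collections import Counter

def sentence_lengths(text: str) -> list[int]:
    """Word count of each sentence, splitting on '.', '!' and '?'."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text: str) -> float:
    """Standard deviation of sentence length divided by the mean length."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

def word_entropy(text: str) -> float:
    """Shannon entropy (in bits) of the word-frequency distribution."""
    words = re.findall(r"\w+", text.lower())
    if not words:
        return 0.0
    counts = Counter(words)
    total = len(words)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

smooth = "The cat sat down. The dog sat down. The bird sat down."
varied = "Stop. The cat, startled by thunder, bolted under the old sofa and refused to move."
print(burstiness(smooth), burstiness(varied))
print(word_entropy(smooth), word_entropy(varied))
```

The evenly paced text scores zero burstiness, while the text that mixes a one-word sentence with a long one scores much higher.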

Final Judgement:

The detection model (often based on something like RoBERTa, a transformer model) looks at all these features and gives a score, for example: “This text is 79% likely to be AI-generated.”
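To show how several signals can be folded into one percentage, here is a deliberately simplified stand-in. It is not a RoBERTa classifier: it is a plain logistic formula over three hand-made features, and every weight in it is a hypothetical number chosen for illustration. A real detector learns its parameters from training data.

```python
import math

# Hypothetical weights (assumptions): negative means "higher value looks more human".
WEIGHTS = {"perplexity": -0.08, "burstiness": -2.0, "filler_rate": 0.5}
BIAS = 2.0  # also made up

def ai_likelihood(features: dict[str, float]) -> float:
    """Squash a weighted sum of features into a score between 0 and 1."""
    z = BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    return 1 / (1 + math.exp(-z))

smooth_text = {"perplexity": 10.0, "burstiness": 0.1, "filler_rate": 2.0}
varied_text = {"perplexity": 60.0, "burstiness": 0.8, "filler_rate": 0.0}

print(f"{ai_likelihood(smooth_text):.0%} likely AI-generated")
print(f"{ai_likelihood(varied_text):.0%} likely AI-generated")
```

The output is a probability-style score, not proof, which is why detector results are usually phrased as “likely” rather than “certain”.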

The traditional AI detection tools described above look at writing style and patterns, but researchers say these no longer work well because AI has improved at sounding like a real person. As models like OpenAI’s GPT-4, Anthropic’s Claude, and Google’s Gemini blur the line between machine and human authorship, a team of researchers is developing a new mathematical framework to implement, test, and improve the “watermarking” methods used to spot machine-made text. Watermarking is a technique that embeds subtle, unique identifiers (watermarks) into AI-generated content, such as text, images, or audio, to identify its source and prevent misuse. These watermarks are designed to be detectable by algorithms and are often invisible to the human eye.
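One approach described in the research literature, often called a “green list” watermark, gives a feel for how this can work for text. It is shown here only as an illustration and is not necessarily the framework mentioned above. The idea: a secret rule marks roughly half of all possible next tokens as “green” depending on the previous token, the generator favours green tokens, and the detector checks whether a text contains more green tokens than chance would allow. All names and the 50% split below are assumptions.

```python
import hashlib
import math

GREEN_FRACTION = 0.5  # assumed share of tokens marked "green" at each step

def is_green(prev_token: str, token: str) -> bool:
    """Pseudo-randomly mark `token` as green, keyed on the previous token."""
    digest = hashlib.sha256(f"{prev_token}|{token}".encode("utf-8")).digest()
    return digest[0] < 256 * GREEN_FRACTION

def write_watermarked(vocab: list[str], length: int, start: str) -> list[str]:
    """Toy 'generator' that always picks the first green token it can find."""
    tokens = [start]
    for _ in range(length):
        prev = tokens[-1]
        tokens.append(next((t for t in vocab if is_green(prev, t)), vocab[0]))
    return tokens

def watermark_z_score(tokens: list[str]) -> float:
    """How many standard deviations the green count sits above pure chance."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    n = len(pairs)
    green = sum(is_green(prev, tok) for prev, tok in pairs)
    expected = GREEN_FRACTION * n
    spread = math.sqrt(n * GREEN_FRACTION * (1 - GREEN_FRACTION))
    return (green - expected) / spread

vocab = [f"w{i}" for i in range(50)]  # hypothetical mini-vocabulary
marked = write_watermarked(vocab, 40, "w0")
print(watermark_z_score(marked))  # large value: far more green tokens than chance
```

A realistic generator would only nudge probabilities toward green tokens rather than always choosing one, so the text stays natural while the statistical signal remains detectable.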

AI-generated content such as text, images, video, and audio can now be seen everywhere, and of these, text is the most readily trusted and the hardest to spot. Text generation is a versatile tool with a wide range of applications in various domains. It is automating daily tasks across marketing, journalism, support, education, and even creative writing! While this enables faster content generation and personalised user experiences, this very convenience brings a challenge: how can we tell what’s been written by a human, and what’s not? As machine-written text blends into the background of everyday writing, detection becomes more than a technical curiosity; it’s a necessity for accountability, honesty, and trust. The following are key sectors where AI-generated text is flooding in and where detection has become critical:

- Blogging & Articles

Why it matters: When reading an article or blog post, we expect a real person to be sharing their genuine thoughts and experiences. This is especially important when what you’re reading could influence your purchasing decisions or shape your views on important topics such as the use of AI or human rights.

- News & Media

AI is used to draft news reports from data (e.g., science, finance), but it can also make mistakes or invent “facts” (hallucinate). In the news, this undermines trust. AI detection supports accuracy and helps news channels and outlets stay accountable, protecting integrity.

- Social Media

In May 2024, Reuters reported that roughly 15% of Twitter (now X) accounts promoting political messaging in favour of former President Trump were suspected to be fake automated accounts, identified using AI-powered detection tools. In such scenarios, it is crucial to check the legitimacy of content.

- E-commerce & Product Descriptions

Many product reviews are now AI-written, and some are fake reviews posted to attract more customers. Detection tools help platforms identify and remove inauthentic content, protecting consumer trust.

- Language Translation & Chatbots

When people chat with virtual assistants or use translation tools, it’s important to know if they’re talking to a real person or a computer. Detecting when a response is AI-generated helps make sure real humans can step in when needed, especially if the question or situation is sensitive or complex.

- Education & Storytelling

AI can easily generate educational narratives, summaries, and study guides. This boosts accessibility, but it can also edge out teachers and educational content creators.

And this is just the beginning. According to Europol’s Innovation Lab report, “as much as 90% of online content may be synthetically generated by the end of the year 2026”.

Other analysts share similar views, warning that generative models may soon dominate our digital information landscape, raising urgent concerns around authenticity, trust, and the need for robust detection systems.

So, Can You Spot the Bot? Yes!

Even without advanced detection tools, there are ways to start identifying AI-written content using logic, observation, and curiosity. Think of it as your personal “first aid kit” for digital literacy in the age of AI. The following illustration gives a bird’s-eye comparison of text generated by large language models and text written by a human.

Description: This comparison demonstrates how essays generated by AI differ from those written by students. The LLM essay is coherent and formal, whereas the student essay shows spelling mistakes and informal expressions.

The First Aid Kit

There are six techniques, the “Super-6”, that you can use to scan for machine-written text before turning to AI detectors.

- Test your Gut:

Read a paragraph and ask:

• Does it feel emotionally bland or over-generalised?

• Does it sound repetitive? Is the grammar oddly perfect while the writing lacks nuance?

- Observe Pattern Loops:

AI often repeats sentence structures or phrases multiple times. Also look out for the “AI content” labels that YouTube, Meta, and X (Twitter) have recently begun adding to generated content.

- Look for Vagueness or Hallucinations:

AI tends to be confidently wrong. Try fact-checking any claims, stats, or references it provides. If a source sounds impressive but doesn’t exist, it’s likely made up, exactly as in the 2023 medical licensing exam incident.

- Spot Missing Context or Personal Insight:

AI often lacks lived experiences. Human writers usually bring emotions, cultural context, or personal stories, something AI-generated content tends to gloss over.

- Practice Online Challenges:

Play “AI or Not?” games like:

• GPTZero’s “Human vs AI” tester

• Google’s “Fact Check Explorer”

• Quiz-style platforms like “DetectGPT” (educational versions)

- Reverse Search:

Paste suspicious phrases into a search engine. If similar content appears elsewhere with minor tweaks, it might have been AI-generated or templated.
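The “pattern loops” check can also be done with a few lines of code if you want a second opinion on your gut feeling. The sketch below lists every three-word phrase that appears more than once. The helper name `repeated_phrases` and the default phrase length are assumptions, and repetition alone does not prove a text is machine-written.

```python
import re
from collections import Counter

def repeated_phrases(text: str, n: int = 3, min_count: int = 2) -> dict[str, int]:
    """Return every n-word phrase that occurs at least `min_count` times."""
    words = re.findall(r"[\w']+", text.lower())
    # Slide a window of n words across the text and count each phrase.
    phrases = Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))
    return {" ".join(p): c for p, c in phrases.items() if c >= min_count}

sample = (
    "It is important to note that cats sleep. "
    "It is important to note that dogs bark."
)
print(repeated_phrases(sample))
```

The phrases it returns are also good candidates to paste into a search engine for the reverse search step.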

This basic awareness can go a long way. By training yourself to question style, structure, and facts, you can often catch AI-written content before it fools you.

AI and the Law

Intellectual Property Rights (IPR) in the age of AI-generated content pose a growing legal grey area. If a machine generates a poem, painting, or research summary, who owns it: the user, the developer, or no one at all? Traditional IPR laws were built around human creativity, making it unclear how to assign ownership to works produced by algorithms. While AI-generated content typically does not constitute plagiarism in the traditional sense, since it doesn’t copy from an identifiable human author, it can still be considered academic dishonesty. As this text and media flood our feeds, policymakers and lawmakers around the world are drafting new rules to ensure transparency, accountability, and ethical use in an increasingly synthetic world. Two of these are:

The EU’s AI Act (2024) mandates transparency: any AI-generated or manipulated content, such as text, images, audio, or video, must be clearly labelled. Providers of systems that interact with humans must disclose AI use. These obligations take effect from August 2, 2026, according to the European Union’s policy framework.

India’s MeitY advisories (2023–2024) also instruct platforms to label AI-generated content, including text, images, and video, and to develop watermarking and authentication tools as part of the IndiaAI Mission initiatives, according to the Artificial India Committee reports.

Bottom line: As generative AI continues to blur the lines between human and machine authorship, detection isn’t just a technical challenge; it’s a societal responsibility. From sharpening our own radar to enforcing policy guardrails, a balanced blend of awareness, tools, and regulation will be crucial in navigating this new digital world.