Imagine Googling “how to fix a leaky faucet” and getting results like “how to repair a dripping tap” instead of just articles containing the exact words “leaky faucet.” That’s the power of semantic search.

In this post, we’ll explore what semantic search is, how it differs from traditional keyword search, and how modern AI models like BERT, Siamese networks, and sentence transformers are making search systems smarter. We’ll also walk through a practical Python example that implements semantic search with embeddings.

Semantic search refers to the process of retrieving information based on its meaning rather than merely matching keywords.

Traditional search engines rely on keyword frequency and placement. In contrast, semantic search understands the intent and context behind your query.

For example:

Query: “What’s the capital of India?”

Keyword Search Result: Pages with “capital” and “India” appearing together.

Semantic Search Result: Pages that identify New Delhi as the capital, even if the phrase “capital of India” isn’t used directly.

Traditional search engines often fail when:

- Synonyms are used (e.g., “car” vs. “automobile”)

- Questions are asked in a conversational tone

- Context is important to disambiguate meaning (e.g., “python” the snake vs. “Python” the language)

Semantic search addresses these challenges by leveraging Natural Language Understanding (NLU).
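To make the synonym problem concrete, here is a minimal sketch of a naive keyword matcher. The `keyword_overlap` helper and the tiny stopword list are assumptions made up for this illustration, not how any real search engine scores pages.

```python
import re

# Tiny illustrative stopword list (an assumption, not a standard one).
STOPWORDS = {"how", "to", "a", "for", "the"}

def content_words(text):
    """Lowercase the text and return its distinct non-stopword words."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS

def keyword_overlap(query, document):
    """Count the content words shared by the query and the document."""
    return len(content_words(query) & content_words(document))

query = "how to fix a leaky faucet"
docs = ["Tips for fixing a leaky faucet", "How to repair a dripping tap"]

for doc in docs:
    print(f"{doc!r}: {keyword_overlap(query, doc)} shared keywords")
```

The second document is about the same problem, yet it shares no content words with the query, so a purely keyword-based ranker has nothing to match on. Note that even “fix” and “fixing” fail to match here, because the sketch does no stemming.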

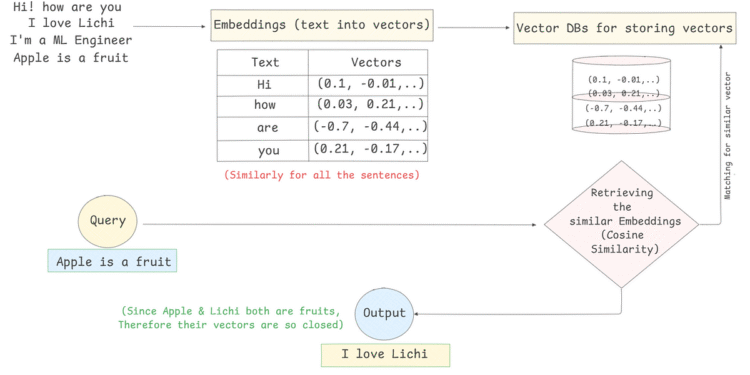

Under the hood, semantic search typically involves three steps:

- Convert queries and documents to vectors using language models.

- Store the document embeddings in a vector database or index.

- Find the embeddings most similar to the query using a similarity metric (like cosine similarity).

1. From Text to Vectors: Embeddings

Models like BERT and RoBERTa, or those in the sentence-transformers library, convert sentences into high-dimensional vectors.

Example:

- “How to fix a leaking tap?” → [0.23, -0.47, ..., 0.19] (a 768-dimensional vector)

These embeddings capture semantic properties of the text. Semantically similar texts lie closer in this vector space.

2. Storing the Vectors in Vector Databases

Vector databases such as Pinecone, Weaviate, and Qdrant store the embeddings so they can be retrieved quickly at query time.
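To get a feel for what a vector database does, here is a toy in-memory stand-in. `TinyVectorIndex` is a hypothetical class written for this post, and the 3-dimensional vectors are made-up values, not real embeddings. It only does a brute-force scan; real vector databases add things like approximate nearest-neighbor indexing and persistence.

```python
import math

class TinyVectorIndex:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self):
        self._items = []  # list of (doc_id, unit-length vector) pairs

    @staticmethod
    def _normalize(vector):
        """Scale a vector to length 1 so a dot product equals cosine similarity."""
        norm = math.sqrt(sum(x * x for x in vector))
        if norm == 0:
            raise ValueError("cannot index a zero vector")
        return [x / norm for x in vector]

    def add(self, doc_id, vector):
        """Store a document id together with its normalized vector."""
        self._items.append((doc_id, self._normalize(vector)))

    def search(self, query_vector, top_k=3):
        """Return the top_k (doc_id, score) pairs, best match first."""
        q = self._normalize(query_vector)
        scored = [
            (doc_id, sum(a * b for a, b in zip(q, vec)))
            for doc_id, vec in self._items
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]

# Made-up 3-dimensional vectors, for illustration only.
index = TinyVectorIndex()
index.add("faucet", [0.9, 0.1, 0.0])
index.add("python", [0.0, 0.2, 0.9])
index.add("tap", [0.8, 0.3, 0.1])

results = index.search([1.0, 0.2, 0.0], top_k=2)
for doc_id, score in results:
    print(doc_id, round(score, 3))
```

Normalizing vectors once at insert time is a common design choice: the search step then needs only a dot product per document.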

3. Retrieving Similar Embeddings

To compare how similar two texts are, we calculate the distance or angle between their vectors. Common similarity/distance metrics include:

a. Cosine Similarity (Most Common for NLP)

What it measures:

The angle between two vectors (i.e., how similar their directions are).

Formula:

Cosine Similarity = (A • B) / (||A|| * ||B||)

where:

- A • B is the dot product of vectors A and B

- ||A|| is the magnitude (length) of vector A

- ||B|| is the magnitude of vector B

Range: -1 to 1

- 1 → Vectors point in the same direction (very similar)

- 0 → Vectors are orthogonal (unrelated)

- -1 → Vectors point in opposite directions (rare in practice with text embeddings)

Why it’s useful:

Cosine similarity ignores magnitude and focuses on direction, which makes it well suited to comparing sentence or word embeddings.
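The formula translates almost directly into code. Below is a minimal pure-Python sketch. The `cosine_similarity` function name and the 2-dimensional toy vectors are chosen just for this illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Toy vectors covering the three reference points of the range.
same_direction = cosine_similarity([1.0, 2.0], [2.0, 4.0])   # close to 1
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 1.0])       # 0
opposite = cosine_similarity([1.0, 2.0], [-1.0, -2.0])       # close to -1

print(same_direction, orthogonal, opposite)
```

Notice that `[2.0, 4.0]` is twice as long as `[1.0, 2.0]`, yet the similarity is still about 1, because only the direction matters.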

b. Euclidean Distance

What it measures:

The straight-line distance between two vectors in space.

Formula:

Euclidean Distance = Square root of the sum of squared differences across all dimensions

= sqrt( (A1 - B1)² + (A2 - B2)² + … + (An - Bn)² )

Interpretation:

- Lower distance = more similar

- Higher distance = more different

Why it’s used less in NLP:

It’s sensitive to vector magnitude and not ideal when you’re only interested in direction or semantic closeness.
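Here is a small sketch of that magnitude sensitivity, using made-up 2-dimensional vectors. The helper names `euclidean_distance` and `unit_vector` are assumptions for this example.

```python
import math

def euclidean_distance(a, b):
    """Straight-line (L2) distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def unit_vector(v):
    """Scale v to length 1 (assumes v is not all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

a = [1.0, 2.0]
b = [2.0, 4.0]  # same direction as a, twice as long

raw_distance = euclidean_distance(a, b)
normalized_distance = euclidean_distance(unit_vector(a), unit_vector(b))

print(raw_distance)         # sqrt(5), roughly 2.236
print(normalized_distance)  # roughly 0
```

The two vectors point the same way, but the raw distance is far from zero. Once both are scaled to unit length the distance collapses to roughly zero, which is why embeddings are often normalized before Euclidean distance is used.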

c. Manhattan Distance (Also called L1 distance)

What it measures:

The sum of absolute differences across all dimensions.

Formula:

Manhattan Distance = |A1 - B1| + |A2 - B2| + … + |An - Bn|

Use case:

Useful in high-dimensional or sparse data scenarios. Not very common in dense text embeddings.
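For completeness, the Manhattan formula is a one-liner in Python. This is a minimal sketch with toy integer vectors, and the function name is an assumption for this example.

```python
def manhattan_distance(a, b):
    """Sum of absolute per-dimension differences (L1 distance)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(abs(x - y) for x, y in zip(a, b))

# |1 - 4| + |2 - 0| + |3 - 3| = 3 + 2 + 0
distance = manhattan_distance([1, 2, 3], [4, 0, 3])
print(distance)  # 5
```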

- BERT & Sentence-BERT: Pretrained models that generate contextual embeddings.

- FAISS: Facebook’s library for efficient similarity search.

- Pinecone, Weaviate, Qdrant: Vector databases.

- Hugging Face Transformers: For generating embeddings from models.

# To install dependencies

pip install sentence-transformers

# Sample Documents

docs = [

"How to learn Python programming?",

"Best ways to stay healthy during winter",

"Tips for fixing a leaky faucet",

"Introduction to machine learning",

"How to repair a dripping tap"

]

# Create Embeddings

from sentence_transformers import SentenceTransformer, util

model = SentenceTransformer('all-MiniLM-L6-v2')

doc_embeddings = model.encode(docs, convert_to_tensor=True)

# Query & Search

query = "How can I fix a leaking tap?"

query_embedding = model.encode(query, convert_to_tensor=True)

# Score every document against the query with cosine similarity

scores = util.cos_sim(query_embedding, doc_embeddings)

# Pick the index of the highest-scoring document

best_match_index = int(scores.argmax())

print(f"Best match: {docs[best_match_index]}")

# Expected output:

# Best match: How to repair a dripping tap

[Figure: visual representation of the code snippet above]

- E-commerce: “Affordable laptop for students” → Results with low-cost notebooks

- Customer Support: Match tickets to relevant knowledge base articles

- Recruitment Platforms: Match resumes to job descriptions

- Chatbots: Retrieve context-aware answers from documentation

Semantic search isn’t just a buzzword — it’s transforming the way we find information. Whether you’re building a smart search feature in your app or optimizing your content for voice search, understanding semantics is now essential.

As language models grow more powerful, the line between “search” and “understanding” continues to blur. And that’s exactly what makes this space so exciting.