Agentic AI design patterns enhance the autonomy of large language models (LLMs) like Llama, Claude, or GPT by leveraging tool use, decision-making, and problem-solving. They provide a structured approach to creating and managing autonomous agents across several use cases.

What are agentic workflows?

An agent is considered more intelligent if it consistently chooses actions that lead to outcomes more closely aligned with its objective function.

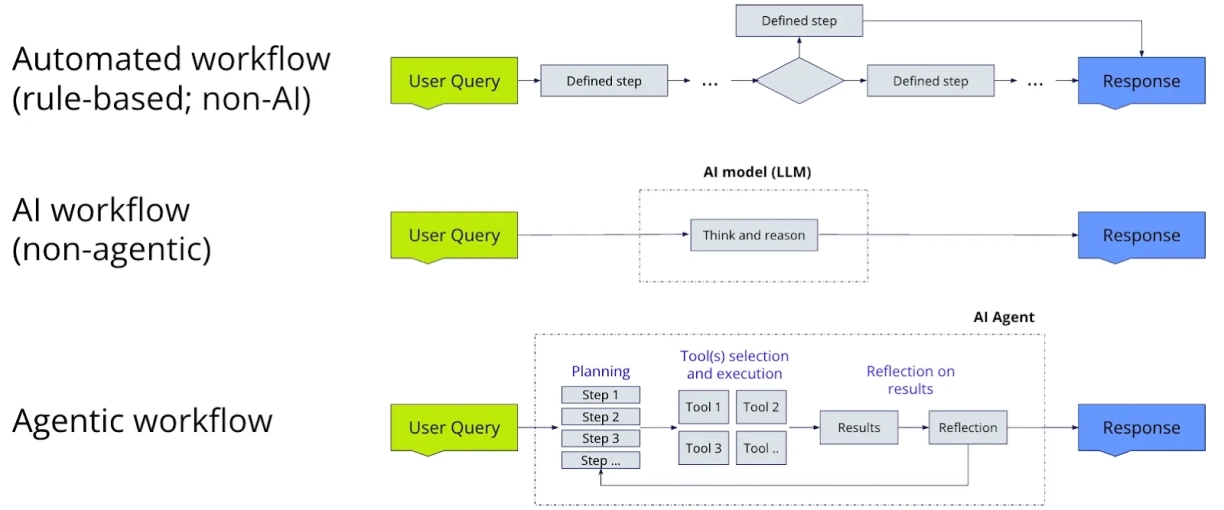

Automated workflows (rule-based, non-AI)

Follow predefined rules and processes, typically based on fixed instructions. They are designed to handle repetitive tasks efficiently, often through systems like robotic process automation (RPA), where little to no decision-making is required.

AI workflows (non-agentic)

Systems where LLMs and tools are orchestrated through predefined code paths, with minimal thinking involved. In a non-agentic workflow, an LLM generates an output from a prompt, like generating a list of recommendations based on input.

Agentic workflows

AI-driven processes where autonomous agents make decisions, take actions, and coordinate tasks with minimal human input. These workflows use key components like reasoning, planning, and tool utilization to handle complex tasks.

Compared to traditional automation such as RPA, which follows fixed rules and designs, agentic workflows are more dynamic and flexible: they adapt to real-time data and unexpected conditions.

In the following workflow, the AI agent answers a user query (for example, “Who won the Euro in 2024?”):

- User query: The user asks a question.

- LLM analysis: The LLM interprets it and determines if external data is needed.

- External tool activation: A search tool retrieves real-time info.

- Response creation: The LLM combines the data and replies:

“Spain won the Euro 2024 against England with a score of 2–1 in the Final in Berlin in July 2024.”
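The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not a real agent: `needs_external_data`, `search_tool`, and `answer` are hypothetical names, the "LLM analysis" is replaced by a keyword check, and the search tool returns a canned snippet instead of calling a search API.

```python
from typing import Callable


def needs_external_data(query: str) -> bool:
    """Stand-in for the LLM analysis step: flag queries about recent events."""
    recency_markers = ("2024", "2025", "latest", "today", "who won")
    return any(marker in query.lower() for marker in recency_markers)


def search_tool(query: str) -> str:
    """Hypothetical search tool; a real one would call a search API."""
    canned_results = {
        "euro": "Spain beat England 2-1 in the Euro 2024 final in Berlin.",
    }
    for keyword, snippet in canned_results.items():
        if keyword in query.lower():
            return snippet
    return ""


def answer(query: str, search: Callable[[str], str] = search_tool) -> str:
    """Route the query: call the tool only when fresh data seems necessary."""
    if needs_external_data(query):
        evidence = search(query)
        if evidence:
            return f"Based on a search: {evidence}"
    return "Answered from the model's own knowledge (no tool call)."


if __name__ == "__main__":
    print(answer("Who won the Euro in 2024?"))
    print(answer("What is an agent?"))
```

In a real system the keyword check would be replaced by the model's own judgment about whether its training data is sufficient, which is what makes the workflow agentic rather than rule-based.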

4 types of agentic AI design patterns

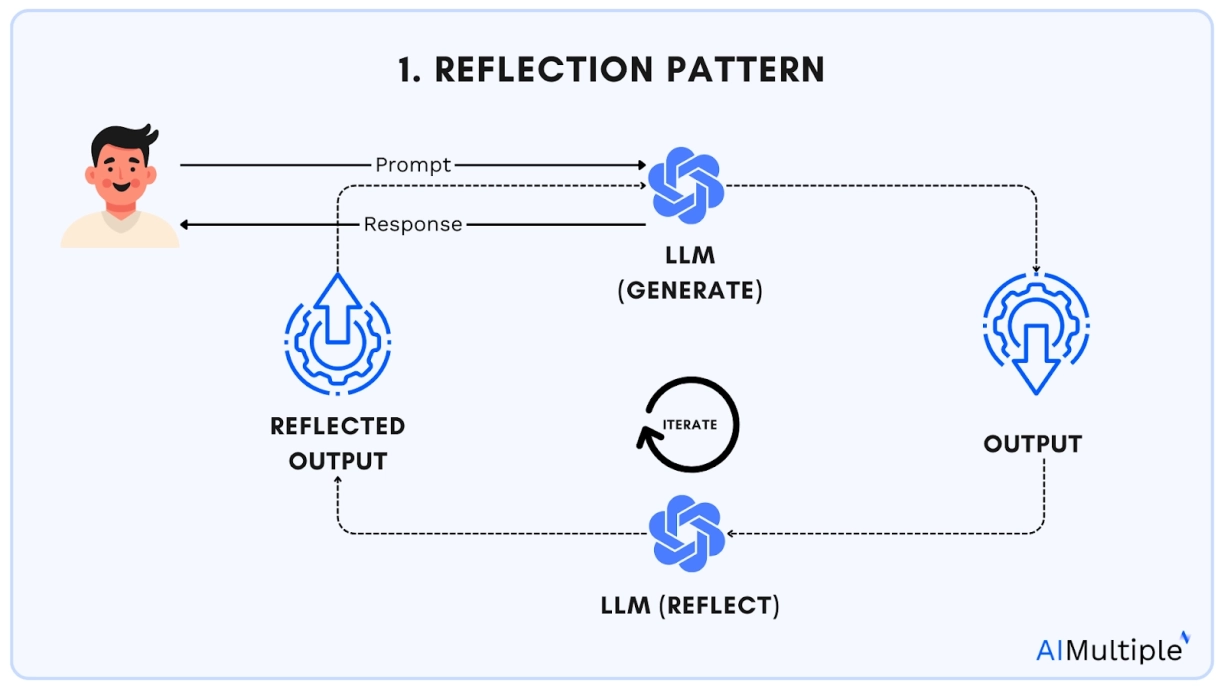

Reflection pattern

The reflection pattern enhances agentic workflows with continuous self-improvement.

→ This pattern involves a self-feedback mechanism where an AI agent evaluates its outputs or decisions before finalizing its response or taking further action.

→ It allows the agent to analyze its own work, identify errors or gaps, and refine its approach, leading to better results over time. This process is not limited to a single iteration: agents can adjust their answers in subsequent interactions.

Real-world example:

AI agents such as GitHub Copilot can refine code through self-reflection by reviewing and revising the code they generate, for example:

- Initial response: GitHub Copilot generates a code snippet based on a prompt.

- Reflection process: Reviews the generated code for errors, inefficiencies, or improvements. It may use a feedback loop, such as running the code in a sandbox environment, to identify bugs.

- Self-iteration: Evaluates whether the generated code functions as expected, refines its logic, and suggests optimizations.
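The generate, review, and iterate steps can be sketched as a loop. The example below is an assumption-laden toy, not how GitHub Copilot works internally: `generate` is a stand-in for an LLM that returns a buggy draft first and a corrected one once it receives feedback, and `critique` plays the role of the sandbox run. Note that `exec` in a fresh namespace is not a real sandbox and is used here only to keep the sketch self-contained.

```python
from typing import Optional, Tuple


def generate(feedback: Optional[str]) -> str:
    """Stand-in for the LLM: buggy draft first, fixed draft after feedback."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def critique(code: str) -> Optional[str]:
    """Run the draft and return a description of the first failure, if any."""
    namespace: dict = {}
    try:
        exec(code, namespace)  # NOT a secure sandbox; illustration only
        result = namespace["add"](2, 3)
    except Exception as exc:
        return f"raised {exc!r}"
    if result != 5:
        return f"add(2, 3) returned {result}, expected 5"
    return None


def reflect(max_rounds: int = 3) -> Tuple[str, int]:
    """Generate, critique, and regenerate until the critique passes."""
    feedback: Optional[str] = None
    draft = ""
    for round_number in range(1, max_rounds + 1):
        draft = generate(feedback)
        feedback = critique(draft)
        if feedback is None:
            return draft, round_number
    return draft, max_rounds


if __name__ == "__main__":
    final_code, rounds = reflect()
    print(f"accepted after {rounds} round(s):\n{final_code}")
```

The `max_rounds` cap is a design choice worth keeping in any real reflection loop, since a critic that never passes would otherwise keep the agent iterating indefinitely.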

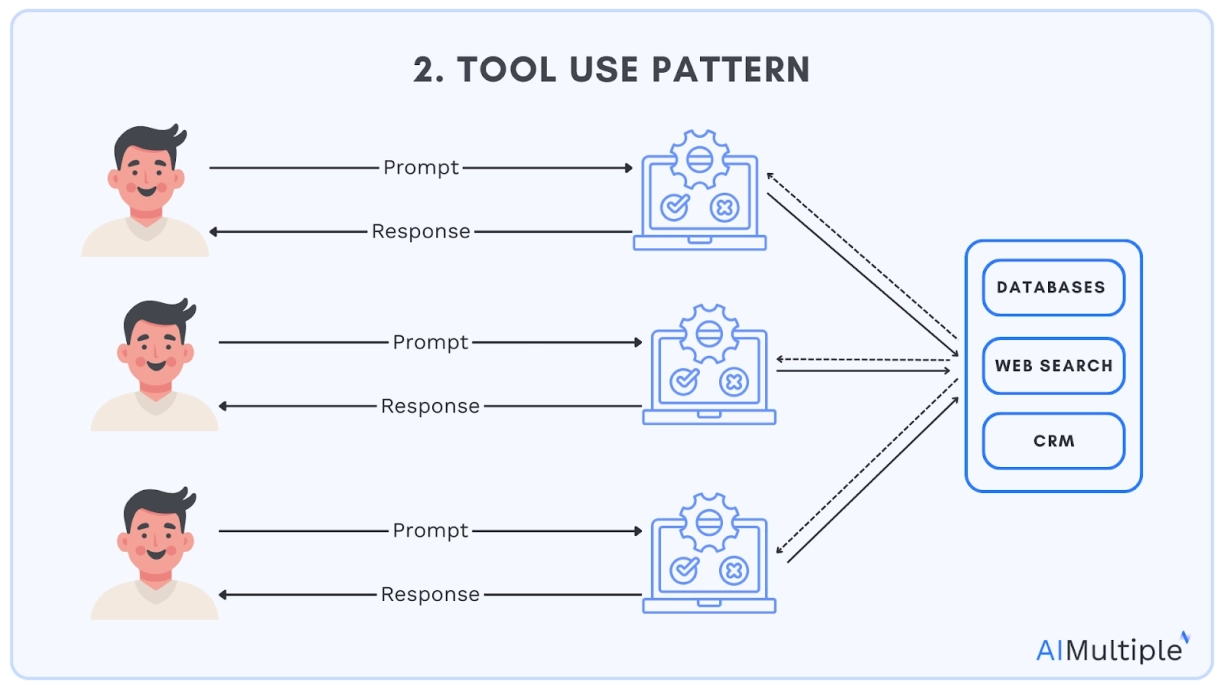

Tool use pattern

The tool use pattern in agentic AI enhances the capabilities of large language models (LLMs) by enabling them to interact dynamically with external tools and resources.

Protocols like the Model Context Protocol (MCP) help standardize the tool use process.

This allows the AI to move beyond its pre-existing training data and perform real-world tasks. With the tool use pattern, agentic models can:

- access real-time information (via APIs) and search the web

- interact with APIs to process and generate responses

- interact with information retrieval systems

- retrieve specific datasets

- run scripts for data analysis

- leverage machine learning models to run specialized algorithms
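A common way to implement tool use is a registry of callable tools plus a dispatcher that executes the structured call the model emits. The sketch below assumes the model outputs a JSON object with `name` and `arguments` keys; that shape, the `tool` decorator, and the `mean` and `lookup_dataset` tools are all hypothetical and are not taken from MCP or any specific vendor API.

```python
import json
from typing import Any, Callable, Dict

# Registry mapping tool names to Python callables (hypothetical design).
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function as a tool the model may call."""
    TOOLS[func.__name__] = func
    return func


@tool
def lookup_dataset(name: str) -> list:
    """Retrieve a specific dataset; the data here is made up for the demo."""
    datasets = {"daily_orders": [12, 15, 9, 20]}
    return datasets[name]


@tool
def mean(values: list) -> float:
    """Run a small analysis script on the retrieved data."""
    return sum(values) / len(values)


def dispatch(tool_call_json: str) -> Any:
    """Parse a model-emitted tool call and execute the matching tool."""
    call = json.loads(tool_call_json)
    tool_name = call["name"]
    if tool_name not in TOOLS:
        raise ValueError(f"unknown tool: {tool_name}")
    return TOOLS[tool_name](**call["arguments"])


if __name__ == "__main__":
    orders = dispatch('{"name": "lookup_dataset", "arguments": {"name": "daily_orders"}}')
    average = dispatch(json.dumps({"name": "mean", "arguments": {"values": orders}}))
    print(orders, average)
```

Rejecting unknown tool names before execution keeps the model from invoking anything outside the registry, which matters once tools have side effects.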

We used popular AI agents to test their tool use capabilities:

Real-world example:

A visual-textual synthesis project uses GPT-4 to interact dynamically with both external tools (such as CLIP for image analysis and GPT-4 for reasoning) and external resources (e.g., design tools, e-commerce platforms) to complete complex tasks.

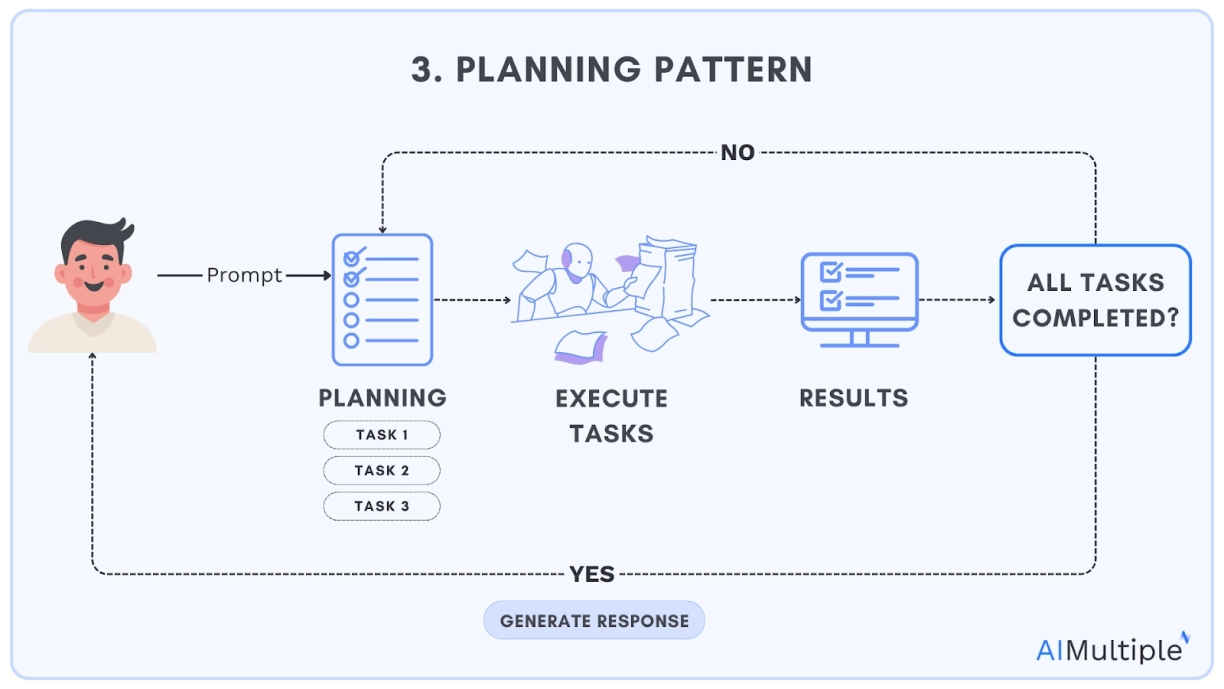

Planning pattern

The planning pattern enables LLMs to break down large tasks into subtasks.

An LLM using the planning pattern will organize the sub-goals into a logical sequence. Depending on the complexity, the agent may plan actions in a linear order or create branches for parallel execution.
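One way to represent such a plan is a dependency graph: subtasks with no unmet dependencies can run in parallel, and the rest wait. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to turn a plan into ordered batches. The subtask names and the `execution_batches` helper are illustrative assumptions; in practice the plan dictionary would come from the LLM's decomposition of the task.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each subtask maps to the set of subtasks it depends on (made-up example plan).
plan: Dict[str, Set[str]] = {
    "gather_requirements": set(),
    "draft_outline": {"gather_requirements"},
    "collect_sources": {"gather_requirements"},
    "write_report": {"draft_outline", "collect_sources"},
}


def execution_batches(dependencies: Dict[str, Set[str]]) -> List[List[str]]:
    """Group subtasks into batches; tasks within a batch can run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the plan is circular
    batches: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for deterministic output
        batches.append(ready)
        sorter.done(*ready)
    return batches


if __name__ == "__main__":
    for step, batch in enumerate(execution_batches(plan), start=1):
        print(step, batch)
```

A purely linear plan produces one task per batch, while independent subtasks such as `draft_outline` and `collect_sources` land in the same batch and form a parallel branch.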

Real-world example:

A group of researchers demonstrated how LLM agents collaborate with models from platforms like Hugging Face to handle complex, larger tasks.

The approach was called HuggingGPT, an LLM-powered agent that leverages LLMs (e.g., ChatGPT) to connect various AI models in machine learning communities (e.g., Hugging Face) to solve AI tasks.

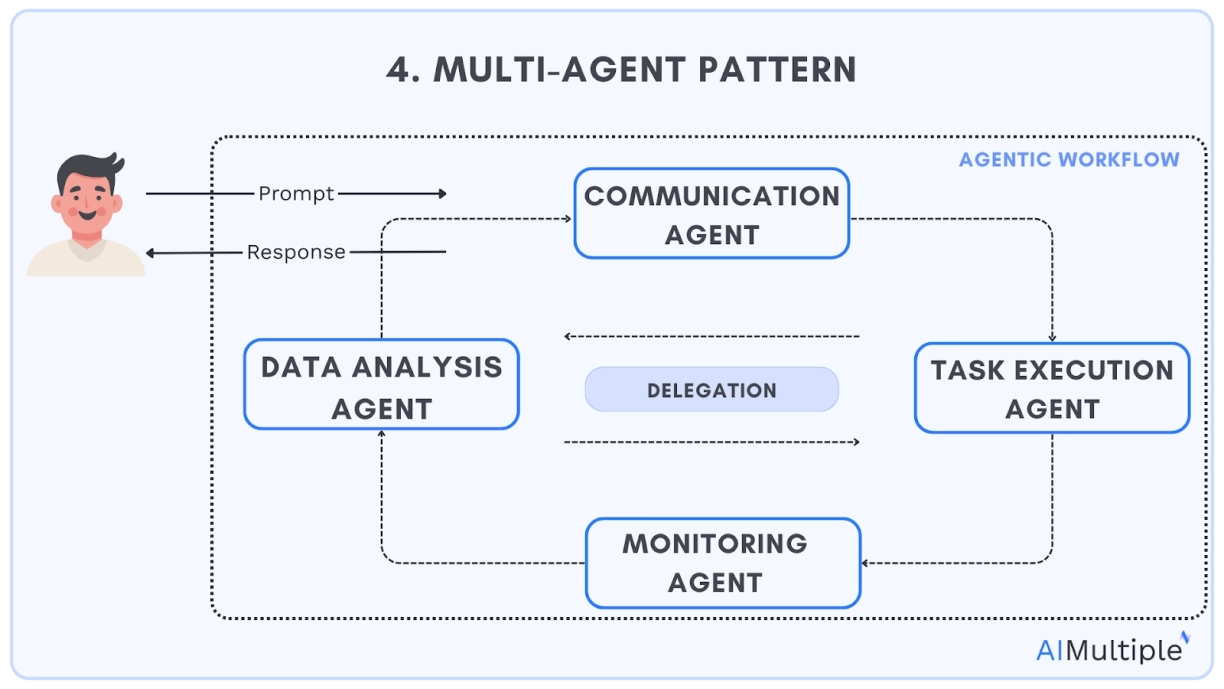

Multi-agent pattern

The multi-agent pattern focuses on task delegation: different agents are assigned different tasks. These agents can be created by prompting a single LLM (or multiple LLMs) to handle distinct responsibilities.

For example, to create a software developer agent, you could prompt the LLM: “You are an expert in writing efficient, clear code. Please write the code to accomplish [specific task].”

In multi-agent systems, agents communicate using agent-to-agent protocols that define the flow of information between them. For instance, Google’s Agent2Agent (A2A) protocol is an open protocol that lets agents advertise their capabilities and exchange tasks and messages in a structured way.
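Role-based delegation can be sketched with a small `Agent` class whose only difference between instances is the system prompt. Everything here is a simplified assumption: `fake_llm` stands in for a real model call, the `Agent` class and `delegate` helper are hypothetical, and agents exchange plain strings rather than messages in the A2A format.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


def fake_llm(system_prompt: str, task: str) -> str:
    """Stand-in for a model call; echoes the task so the flow is visible."""
    return f"done: {task}"


@dataclass
class Agent:
    name: str
    system_prompt: str  # the role prompt is what specializes the agent

    def run(self, task: str) -> str:
        return f"{self.name} -> {fake_llm(self.system_prompt, task)}"


def delegate(agents: Dict[str, Agent], assignments: List[Tuple[str, str]]) -> List[str]:
    """Send each task to the agent responsible for it and collect the results."""
    return [agents[agent_name].run(task) for agent_name, task in assignments]


if __name__ == "__main__":
    team = {
        "developer": Agent(
            "developer",
            "You are an expert in writing efficient, clear code.",
        ),
        "tester": Agent(
            "tester",
            "You are an expert in writing thorough unit tests.",
        ),
    }
    results = delegate(
        team,
        [("developer", "implement a CSV parser"), ("tester", "test the CSV parser")],
    )
    for line in results:
        print(line)
```

Frameworks such as those listed below add what this sketch leaves out, including shared memory, turn-taking between agents, and handoffs decided by the agents themselves.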

Real-world examples of multi-agent frameworks:

- AutoGen

- LangChain

- ChatDev

- OpenAI Swarm

For more: Open source agentic AI builders & frameworks

Agentic workflow use cases

1. Retrieval-augmented generation (RAG)

Agentic design patterns can be used in RAG systems to incorporate agents into the RAG pipeline.
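As a rough illustration, an agent in a RAG pipeline might check whether retrieval returned anything useful and retry with a rewritten query before answering. The sketch below assumes a toy word-overlap retriever and a pre-supplied list of query rewrites. In a real system the retriever would typically be a vector search and the rewrites would come from the LLM. The names `retrieve` and `agentic_rag` are hypothetical.

```python
from typing import List

DOCUMENTS = [
    "The reflection pattern lets an agent review and refine its own output.",
    "The planning pattern breaks a large task into ordered subtasks.",
    "The tool use pattern lets a model call external APIs and scripts.",
]


def retrieve(query: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Toy retriever: rank documents by how many query words they share."""
    query_terms = set(query.lower().split())
    scored = []
    for doc in documents:
        doc_terms = set(doc.lower().rstrip(".").split())
        overlap = len(query_terms & doc_terms)
        if overlap > 0:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable sort keeps ties in order
    return [doc for _, doc in scored[:top_k]]


def agentic_rag(query: str, documents: List[str], rewrites: List[str]) -> str:
    """Try the original query, then each rewrite, until retrieval succeeds."""
    for attempt in [query, *rewrites]:
        hits = retrieve(attempt, documents)
        if hits:
            return f"Context used: {hits[0]}"
    return "No supporting context found."


if __name__ == "__main__":
    print(agentic_rag("self-critique", DOCUMENTS, ["reflection pattern"]))
```

The difference from a plain RAG pipeline is the decision step: the agent inspects the retrieval result and chooses to retry rather than passing an empty context to the generator.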

For more, see:

→ Discover Top 20+ Agentic RAG Systems

→ Compare Top 20+ Retrieval-Augmented Generation Tools

2. Software development

- Code generation & completion:

  - Cursor: Automatically generates code snippets and entire functions based on natural language descriptions.

  - Devin: Creates apps from scratch with minimal human oversight, using a browser or a command line interface.

- Automated software testing:

  - Diffblue: Automatically writes unit tests for Java code, ensuring coverage and code correctness.

  - Snyk: Detects and fixes security vulnerabilities in dependencies without human input.

3. Gaming

- Autonomous NPCs:

  - AI Dungeon: Uses an LLM to generate fully autonomous text-based NPCs, reacting to player actions and creating narrative events.

  - AgentRefine: Enables AI agents and models to identify errors and autonomously correct them, improving their performance for general tasks.

- Autonomous exploration:

  - Spore (AI-controlled evolution): AI agents reproduce, mutate, and evolve without human intervention, becoming increasingly intelligent and diverse with each generation.

- Pathfinding:

  - NavMesh AI: Autonomous pathfinding system in gaming, where agents can navigate dynamic environments.

4. Multimedia creation

- Turning GenAI search results into Wikipedia-style pages:

  - Perplexity Pages: When a user enters a search query, Perplexity Pages aggregates relevant information from multiple sources and turns the search results into Wikipedia-style pages.

- Automated video production:

  - Pictory: Autonomously turns text-based content into video.

5. Research & data analysis

- ChemCrow: Runs simulations and makes autonomous recommendations for drug discovery.

- AI2: Provides data warehouse management through autonomous systems.

6. Computer use

Computer-use agents (e.g., Anthropic’s Claude Computer Use, or Open Operator) can interact with GUIs (the buttons, menus, and text fields people see on a screen) just as humans do.

These agents can:

- Fill out online forms

- Search the web

- Book travel arrangements

- Automate workflows

7. Customer service

AI agents for customer service respond to customer queries in natural language, interpret context, and generate human-like responses. These agents are commonly used for contact center automation. Some examples include:

- Zendesk AI

- Intercom’s Fin

- Kore.AI Agent

8. Healthcare automation

Agentic AI for healthcare aims to leverage healthcare systems to automate workflows in clinical operations. Tool examples include:

General-purpose healthcare automation:

- Sully.ai

- Hippocratic AI

- Innovaccer

- Beam AI Healthcare agent

- Notable Health

Patient support:
