AutoBNN combines the interpretability of traditional probabilistic approaches with the scalability and flexibility of neural networks for building sophisticated time series prediction models using complex data.

Time series problems are ubiquitous, from forecasting weather and traffic patterns to understanding economic trends. Bayesian approaches start with an assumption about the data's patterns (prior probability), collect evidence (e.g., new time series data), and continuously update that assumption to form a posterior probability distribution. Traditional Bayesian approaches like Gaussian processes (GPs) and Structural Time Series are extensively used for modeling time series data, e.g., the commonly used Mauna Loa CO2 dataset. However, they often rely on domain experts to painstakingly select appropriate model components and may be computationally expensive. Alternatives such as neural networks lack interpretability, making it difficult to understand how they generate forecasts, and don't produce reliable confidence intervals.

To that end, we introduce AutoBNN, a new open-source package written in JAX. AutoBNN automates the discovery of interpretable time series forecasting models, provides high-quality uncertainty estimates, and scales effectively for use on large datasets. We describe how AutoBNN combines the interpretability of traditional probabilistic approaches with the scalability and flexibility of neural networks.

AutoBNN is based on a line of research that over the past decade has yielded improved predictive accuracy by modeling time series using GPs with learned kernel structures. The kernel function of a GP encodes assumptions about the function being modeled, such as the presence of trends, periodicity or noise. With learned GP kernels, the kernel function is defined compositionally: it is either a base kernel (such as Linear, Quadratic, Periodic, Matérn or ExponentiatedQuadratic) or a composite that combines two or more kernel functions using operators such as Addition, Multiplication, or ChangePoint. This compositional kernel structure serves two related purposes. First, it is simple enough that a user who is an expert about their data, but not necessarily about GPs, can construct a reasonable prior for their time series. Second, techniques like Sequential Monte Carlo can be used for discrete searches over small structures and can output interpretable results.
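As a rough illustration of how a compositional kernel is assembled, the following JAX sketch represents each base kernel as a function that returns a Gram matrix and combines kernels with Addition and Multiplication operators. The function names and hyperparameter values are illustrative assumptions, not the API of AutoBNN or of any GP library.

```python
import jax.numpy as jnp

# Base kernels: each returns a function mapping two 1-D input arrays to a Gram matrix.
def exponentiated_quadratic(length_scale=1.0):
    def k(x, y):
        d = x[:, None] - y[None, :]
        return jnp.exp(-0.5 * (d / length_scale) ** 2)
    return k

def linear(bias=0.0):
    def k(x, y):
        return bias + x[:, None] * y[None, :]
    return k

def periodic(period=1.0, length_scale=1.0):
    def k(x, y):
        d = x[:, None] - y[None, :]
        return jnp.exp(-2.0 * jnp.sin(jnp.pi * jnp.abs(d) / period) ** 2 / length_scale**2)
    return k

# Operators: combine existing kernels into a new kernel.
def add(*kernels):
    return lambda x, y: sum(k(x, y) for k in kernels)

def multiply(*kernels):
    def k(x, y):
        out = jnp.ones((x.shape[0], y.shape[0]))
        for kern in kernels:
            out = out * kern(x, y)
        return out
    return k

# A linear trend plus a slowly varying seasonal pattern (period 12).
kernel = add(linear(), multiply(periodic(period=12.0), exponentiated_quadratic(length_scale=50.0)))
xs = jnp.linspace(0.0, 48.0, 49)
gram = kernel(xs, xs)
```

Reading the composite expression back (a trend plus a locally periodic term) is what makes this kind of prior interpretable.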

AutoBNN improves upon these ideas, replacing the GP with Bayesian neural networks (BNNs) while retaining the compositional kernel structure. A BNN is a neural network with a probability distribution over weights rather than a fixed set of weights. This induces a distribution over outputs, capturing uncertainty in the predictions. BNNs bring the following advantages over GPs: First, training large GPs is computationally expensive, and traditional training algorithms scale as the cube of the number of data points in the time series. In contrast, for a fixed width, training a BNN will often be approximately linear in the number of data points. Second, BNNs lend themselves better to GPU and TPU hardware acceleration than GP training operations. Third, compositional BNNs can be easily combined with traditional deep BNNs, which have the ability to do feature discovery. One could imagine "hybrid" architectures, in which users specify a top-level structure of Add(Linear, Periodic, Deep), and the deep BNN is left to learn the contributions from potentially high-dimensional covariate information.

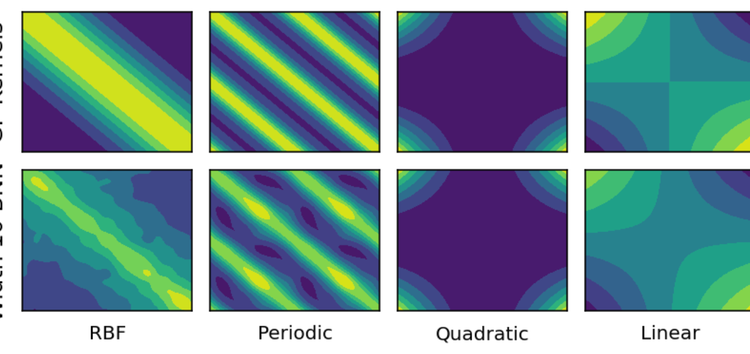
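To make "a distribution over weights induces a distribution over outputs" concrete, here is a minimal JAX sketch that samples many weight settings of a small one-hidden-layer network from standard-normal priors and evaluates each one. The network, the tanh activation and the prior scales are assumptions chosen for illustration; AutoBNN itself builds its networks with flax.linen.

```python
import jax
import jax.numpy as jnp

def sample_prior_params(key, width, num_inputs=1):
    """Draw one weight setting of a one-hidden-layer network from N(0, 1) priors.
    Output weights are scaled by 1/sqrt(width) so the output variance stays O(1)."""
    k1, k2, k3 = jax.random.split(key, 3)
    return {
        "w_in": jax.random.normal(k1, (num_inputs, width)),
        "b_in": jax.random.normal(k2, (width,)),
        "w_out": jax.random.normal(k3, (width,)) / jnp.sqrt(width),
    }

def forward(params, x):
    """Evaluate the network on x of shape (num_points, num_inputs).
    For a fixed width, the cost is linear in num_points."""
    h = jnp.tanh(x @ params["w_in"] + params["b_in"])  # tanh is an arbitrary choice here
    return h @ params["w_out"]

xs = jnp.linspace(-3.0, 3.0, 100)[:, None]
keys = jax.random.split(jax.random.PRNGKey(0), 500)
# Each weight draw gives one function; 500 draws give a distribution over outputs.
samples = jax.vmap(lambda k: forward(sample_prior_params(k, width=50), xs))(keys)
mean, std = samples.mean(axis=0), samples.std(axis=0)
```

The per-point spread `std` is the prior uncertainty; inference (MAP or MCMC) would then condition these weights on data.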

How might one translate a GP with compositional kernels into a BNN, then? A single-layer neural network will typically converge to a GP as the number of neurons (or "width") goes to infinity. More recently, researchers have found a correspondence in the other direction: many popular GP kernels (such as Matern, ExponentiatedQuadratic, Polynomial or Periodic) can be obtained as infinite-width BNNs with appropriately chosen activation functions and weight distributions. Furthermore, these BNNs remain close to the corresponding GP even when the width is very much less than infinite. For example, the figures below show the difference in the covariance between pairs of observations, and the regression results, of the true GPs and their corresponding width-10 neural network versions.

Comparison of Gram matrices between true GP kernels (top row) and their width-10 neural network approximations (bottom row).

Comparison of regression results between true GP kernels (top row) and their width-10 neural network approximations (bottom row).
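One well-known instance of this correspondence is the random Fourier features construction for the ExponentiatedQuadratic (RBF) kernel: a one-layer network with cosine activations, input weights drawn from N(0, 1/ℓ²), uniform phases, and N(0, 1) output weights has a covariance that approaches the RBF kernel as the width grows. The sketch below compares the two Gram matrices; it is an illustration of the general idea, not necessarily the activation or weight distribution AutoBNN uses.

```python
import jax
import jax.numpy as jnp

def rff_gram(key, xs, width, length_scale=1.0):
    """Covariance of f(x) = sqrt(2/width) * sum_i a_i cos(w_i x + b_i) with a_i ~ N(0, 1),
    for one fixed draw of (w, b). It approaches the RBF kernel as width grows."""
    k_w, k_b = jax.random.split(key)
    w = jax.random.normal(k_w, (width,)) / length_scale
    b = jax.random.uniform(k_b, (width,), minval=0.0, maxval=2.0 * jnp.pi)
    features = jnp.sqrt(2.0 / width) * jnp.cos(xs[:, None] * w[None, :] + b[None, :])
    # Integrating out the output weights a ~ N(0, I) gives Cov[f(x), f(x')] = phi(x) . phi(x').
    return features @ features.T

def rbf_gram(xs, length_scale=1.0):
    d = xs[:, None] - xs[None, :]
    return jnp.exp(-0.5 * (d / length_scale) ** 2)

xs = jnp.linspace(-2.0, 2.0, 20)
exact = rbf_gram(xs)
for width in (10, 10_000):
    approx = rff_gram(jax.random.PRNGKey(0), xs, width)
    print(width, float(jnp.abs(approx - exact).max()))  # max Gram-matrix error
```

The width-10 error is visibly larger than the wide network's, but the overall structure of the Gram matrix is already recognizable, in the spirit of the figures above.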

Finally, the translation is completed with BNN analogues of the Addition and Multiplication operators over GPs, and with input warping to produce periodic kernels. BNN addition is straightforwardly given by adding the outputs of the component BNNs. BNN multiplication is achieved by multiplying the activations of the hidden layers of the BNNs and then applying a shared dense layer. We are therefore limited to only multiplying BNNs with the same hidden width.
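The three constructions can be sketched in plain JAX as follows. The helper names (`init`, `hidden`, `periodic_warp`, `bnn_add`, `bnn_multiply`) and the tanh activation are hypothetical, chosen for illustration; they are not AutoBNN's internals. Warping the input onto a circle with cos/sin makes any network fed those inputs exactly periodic.

```python
import jax
import jax.numpy as jnp

def init(key, num_inputs, width):
    """Draw one-hidden-layer weights from standard-normal priors (illustrative choice)."""
    k1, k2, k3 = jax.random.split(key, 3)
    return {"w_in": jax.random.normal(k1, (num_inputs, width)),
            "b_in": jax.random.normal(k2, (width,)),
            "w_out": jax.random.normal(k3, (width, 1)) / jnp.sqrt(width)}

def hidden(params, x):
    """Hidden-layer activations, shape (num_points, width)."""
    return jnp.tanh(x @ params["w_in"] + params["b_in"])

def periodic_warp(x, period):
    """Input warping: map x onto a circle so a network fed these inputs is periodic."""
    angle = 2.0 * jnp.pi * x / period
    return jnp.concatenate([jnp.cos(angle), jnp.sin(angle)], axis=-1)

def bnn_add(h_a, w_out_a, h_b, w_out_b):
    """Addition: sum the outputs of the two component networks."""
    return h_a @ w_out_a + h_b @ w_out_b

def bnn_multiply(h_a, h_b, shared_w_out):
    """Multiplication: elementwise product of hidden activations, then a shared dense layer."""
    if h_a.shape != h_b.shape:
        raise ValueError("multiplied networks must have the same hidden width")
    return (h_a * h_b) @ shared_w_out

x = jnp.linspace(0.0, 24.0, 50)[:, None]
k_a, k_b, k_s = jax.random.split(jax.random.PRNGKey(0), 3)
periodic_net = init(k_a, num_inputs=2, width=16)  # consumes warped (cos, sin) inputs
trend_net = init(k_b, num_inputs=1, width=16)
shared_w_out = jax.random.normal(k_s, (16, 1)) / jnp.sqrt(16.0)

h_periodic = hidden(periodic_net, periodic_warp(x, period=12.0))
h_trend = hidden(trend_net, x)
summed = bnn_add(h_periodic, periodic_net["w_out"], h_trend, trend_net["w_out"])
product = bnn_multiply(h_periodic, h_trend, shared_w_out)
```

The shape check in `bnn_multiply` is exactly the same-width restriction described above.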

The AutoBNN package is available within TensorFlow Probability. It is implemented in JAX and uses the flax.linen neural network library. It implements all of the base kernels and operators discussed so far (Linear, Quadratic, Matern, ExponentiatedQuadratic, Periodic, Addition, Multiplication) plus one new kernel and three new operators:

- a OneLayer kernel, a single hidden layer ReLU BNN,
- a ChangePoint operator that allows smoothly switching between two kernels,
- a LearnableChangePoint operator, which is the same as ChangePoint except that position and slope are given prior distributions and can be learnt from the data, and
- a WeightedSum operator.
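A common way to switch smoothly between two components, as in GP changepoint kernels, is a sigmoid gate centred at the change location. The sketch below applies it to two arbitrary functions; if the two components are independent, the gated sum has covariance (1 - σ(x))(1 - σ(x'))k₁(x, x') + σ(x)σ(x')k₂(x, x'). This is an illustrative assumption about the mechanism, not AutoBNN's implementation. In a LearnableChangePoint-style variant, `location` and `slope` would be random variables with priors rather than fixed numbers.

```python
import jax
import jax.numpy as jnp

def change_point(f_before, f_after, location, slope):
    """Smoothly switch from f_before to f_after around `location`.
    Larger `slope` gives a sharper transition."""
    def f(x):
        gate = jax.nn.sigmoid(slope * (x - location))  # ~0 before, ~1 after
        return (1.0 - gate) * f_before(x) + gate * f_after(x)
    return f

# Switch from a flat 0 to a flat 1 around x = 5.
cp = change_point(jnp.zeros_like, jnp.ones_like, location=5.0, slope=4.0)
values = cp(jnp.array([0.0, 5.0, 10.0]))  # approximately [0.0, 0.5, 1.0]
```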

WeightedSum combines two or more BNNs with learnable mixing weights, where the learnable weights follow a Dirichlet prior. By default, a flat Dirichlet distribution with concentration 1.0 is used.
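A minimal sketch of the idea, assuming the component BNN outputs are already available as an array (here replaced by three hypothetical fixed functions): mixing weights are drawn from a flat Dirichlet with concentration 1.0, and the weighted sum is a single matrix-vector product. Because the flat Dirichlet density over three components is the constant Γ(3) = 2, its log prior does not favour any particular mixture.

```python
import jax
import jax.numpy as jnp
from jax.scipy.stats import dirichlet

xs = jnp.linspace(0.0, 1.0, 50)
# Outputs of three component BNNs (hypothetical stand-ins), shape (3, num_points).
component_outputs = jnp.stack([xs, jnp.sin(2.0 * jnp.pi * xs), jnp.ones_like(xs)])

# Flat Dirichlet prior: concentration 1.0 for every component.
concentration = jnp.ones(component_outputs.shape[0])
weights = jax.random.dirichlet(jax.random.PRNGKey(0), concentration)

def weighted_sum(outputs, weights):
    """Mix component outputs with weights that lie on the probability simplex."""
    return weights @ outputs

mixed = weighted_sum(component_outputs, weights)
log_prior = dirichlet.logpdf(weights, concentration)  # log(2) for any point on the simplex
```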

WeightedSums allow a "soft" version of structure discovery, i.e., training a linear combination of many possible models at once. In contrast to structure discovery with discrete structures, such as in AutoGP, this allows us to use standard gradient methods to learn structures, rather than using expensive discrete optimization. Instead of evaluating potential combinatorial structures in series, WeightedSum allows us to evaluate them in parallel.
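The following toy sketch shows "soft" structure discovery by plain gradient descent. It assumes three fixed candidate components (hypothetical stand-ins for trained base BNNs) and a synthetic target built from only the first two; the mixing weights are parameterized with a softmax, an assumption made here to keep them on the simplex, and the Dirichlet prior is omitted for brevity. Gradient descent drives the unused component's weight toward zero, and all candidates are evaluated in one matrix product rather than one at a time.

```python
import jax
import jax.numpy as jnp

xs = jnp.arange(200) / 200.0
# Fixed candidate components standing in for base BNNs (hypothetical).
components = jnp.stack([jnp.sin(2 * jnp.pi * xs),
                        jnp.cos(2 * jnp.pi * xs),
                        jnp.sin(4 * jnp.pi * xs)])
ys = 0.3 * components[0] + 0.7 * components[1]  # synthetic target: third component unused

def loss(logits):
    weights = jax.nn.softmax(logits)      # keep mixing weights on the simplex
    predictions = weights @ components    # every candidate evaluated in one matmul
    return jnp.mean((predictions - ys) ** 2)

def gd_step(_, logits):
    return logits - 1.0 * jax.grad(loss)(logits)  # learning rate 1.0

logits = jax.lax.fori_loop(0, 2000, gd_step, jnp.zeros(3))
learned_weights = jax.nn.softmax(logits)  # close to (0.3, 0.7, 0.0)
```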

To enable easy exploration, AutoBNN defines several model structures that contain either top-level or internal WeightedSums. The names of these models can be used as the first parameter in any of the estimator constructors, and include things like sum_of_stumps (the WeightedSum over all the base kernels) and sum_of_shallow (which adds all possible combinations of base kernels with all operators).

Illustration of the sum_of_stumps model. The bars in the top row show the amount by which each base kernel contributes, and the bottom row shows the function represented by the base kernel. The resulting weighted sum is shown on the right.

The figure below demonstrates the process of structure discovery on the N374 series (a time series of yearly financial data starting from 1949) from the M3 dataset. The six base structures were ExponentiatedQuadratic (which is the same as the Radial Basis Function kernel, or RBF for short), Matern, Linear, Quadratic, OneLayer and Periodic kernels. The figure shows the MAP estimates of their weights over an ensemble of 32 particles. All of the high-likelihood particles gave a large weight to the Periodic component, low weights to Linear, Quadratic and OneLayer, and a large weight to either RBF or Matern.

Parallel coordinates plot of the MAP estimates of the base kernel weights over 32 particles. The sum_of_stumps model was trained on the N374 series from the M3 dataset (inset in blue). Darker lines correspond to particles with higher likelihoods.

By using WeightedSums as the inputs to other operators, it is possible to express rich combinatorial structures while keeping models compact and the number of learnable weights small. As an example, we include the sum_of_products model (illustrated in the figure below), which first creates a pairwise product of two WeightedSums, and then a sum of the two products. By setting some of the weights to zero, we can create many different discrete structures. The total number of possible structures in this model is 2^16 (65,536), since there are 16 base kernels that can be turned on or off. All of these structures are explored implicitly by training just this one model.

Illustration of the “sum_of_products” model. Each of the four WeightedSums has the same structure as the “sum_of_stumps” model.
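The 2^16 count can be checked by enumerating on/off masks, and a mask can be read back as the discrete structure it selects. The sketch below assumes, purely for illustration, that the 16 base kernels are split evenly across the four WeightedSums (four each) and uses placeholder kernel names; the real sum_of_products model may be organized differently.

```python
import itertools

# Assumption for illustration: four WeightedSums over the same four base kernels,
# giving 16 on/off switches. Kernel names are placeholders.
KERNELS = ("Linear", "Periodic", "Matern", "OneLayer")
NUM_SUMS = 4

def describe(mask):
    """Render the structure selected by a 0/1 mask over the 16 weights as
    (WS0 * WS1) + (WS2 * WS3), keeping only kernels whose weight is nonzero."""
    sums = []
    for i in range(NUM_SUMS):
        chunk = mask[i * len(KERNELS):(i + 1) * len(KERNELS)]
        active = [name for name, on in zip(KERNELS, chunk) if on]
        sums.append("(" + " + ".join(active) + ")" if active else "0")
    return f"{sums[0]} * {sums[1]} + {sums[2]} * {sums[3]}"

masks = list(itertools.product((0, 1), repeat=NUM_SUMS * len(KERNELS)))
print(len(masks))  # 65536, i.e. 2**16

example = (0, 1, 0, 0,  1, 0, 0, 0,  0, 0, 1, 0,  0, 0, 0, 1)
print(describe(example))  # (Periodic) * (Linear) + (Matern) * (OneLayer)
```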

We have found, however, that certain combinations of kernels (e.g., the product of Periodic and either the Matern or ExponentiatedQuadratic) lead to overfitting on many datasets. To prevent this, we have defined model classes like sum_of_safe_shallow that exclude such products when performing structure discovery with WeightedSums.
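The exclusion idea can be sketched as filtering the candidate pairwise products before they are placed in a WeightedSum. This is a hypothetical illustration covering only products of distinct base kernels; it is not how sum_of_safe_shallow is actually defined in AutoBNN.

```python
import itertools

BASE_KERNELS = ("ExponentiatedQuadratic", "Matern", "Linear", "Quadratic", "OneLayer", "Periodic")
# Products reported above as prone to overfitting.
UNSAFE_PRODUCTS = {
    frozenset({"Periodic", "Matern"}),
    frozenset({"Periodic", "ExponentiatedQuadratic"}),
}

def candidate_products(kernels, exclude=frozenset()):
    """All unordered pairs of distinct base kernels, minus the excluded pairs."""
    return [pair for pair in itertools.combinations(kernels, 2)
            if frozenset(pair) not in exclude]

all_pairs = candidate_products(BASE_KERNELS)                    # 15 pairs
safe_pairs = candidate_products(BASE_KERNELS, UNSAFE_PRODUCTS)  # 13 pairs
```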

For training, AutoBNN provides AutoBnnMapEstimator and AutoBnnMCMCEstimator to perform MAP and MCMC inference, respectively. Either estimator can be combined with any of the six likelihood functions, including four based on normal distributions with different noise characteristics for continuous data and two based on the negative binomial distribution for count data.
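For intuition about what MAP inference optimizes, here is a minimal JAX sketch for a tiny Bayesian linear model (a width-free stand-in for a LinearBNN) with N(0, 1) priors on its weights and a normal likelihood with fixed noise 0.1. MAP minimizes the negative log joint, i.e. minus (log prior + log likelihood); MCMC would instead draw samples from the posterior. The model, data and step size are assumptions for illustration, not AutoBNN's internals.

```python
import jax
import jax.numpy as jnp
from jax.scipy.stats import norm

xs = jnp.linspace(0.0, 1.0, 100)
noise = 0.1 * jax.random.normal(jax.random.PRNGKey(0), xs.shape)
ys = 2.0 * xs + 0.5 + noise  # synthetic data: slope 2, intercept 0.5

def neg_log_joint(params):
    slope, intercept = params[0], params[1]
    log_prior = norm.logpdf(slope, 0.0, 1.0) + norm.logpdf(intercept, 0.0, 1.0)
    log_lik = norm.logpdf(ys, slope * xs + intercept, 0.1).sum()
    return -(log_prior + log_lik)

def gd_step(_, params):
    return params - 1e-4 * jax.grad(neg_log_joint)(params)  # small step for stability

map_params = jax.lax.fori_loop(0, 5000, gd_step, jnp.zeros(2))
```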

Result from running AutoBNN on the Mauna Loa CO2 dataset in our example colab. The model captures the trend and seasonal component in the data. Extrapolating into the future, the mean prediction slightly underestimates the actual trend, while the 95% confidence interval gradually increases.

To fit a model like the one in the figure above, all it takes is the following few lines of code, using the scikit-learn-inspired estimator interface:

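```python
import jax
import autobnn as ab

# Compose a model: the sum of periodic, linear and Matern components.
model = ab.operators.Add(
    bnns=(ab.kernels.PeriodicBNN(width=50),
          ab.kernels.LinearBNN(width=50),
          ab.kernels.MaternBNN(width=50)))

# MAP estimator using the 'normal_likelihood_logistic_noise' likelihood.
# periods=[12] is the period for the periodic component (yearly seasonality in monthly data).
estimator = ab.estimators.AutoBnnMapEstimator(
    model, 'normal_likelihood_logistic_noise', jax.random.PRNGKey(42),
    periods=[12])

# my_training_data_xs / my_training_data_ys are your own training arrays.
estimator.fit(my_training_data_xs, my_training_data_ys)
# Lower, middle and upper predictive quantiles at the given inputs.
low, mid, high = estimator.predict_quantiles(my_training_data_xs)
```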

AutoBNN provides a powerful and flexible framework for building sophisticated time series prediction models. By combining the strengths of BNNs and GPs with compositional kernels, AutoBNN opens a world of possibilities for understanding and forecasting complex data. We invite the community to try the colab, and to leverage this library to innovate and solve real-world challenges.

AutoBNN was written by Colin Carroll, Thomas Colthurst, Urs Köster and Srinivas Vasudevan. We would like to thank Kevin Murphy, Brian Patton and Feras Saad for their advice and feedback.