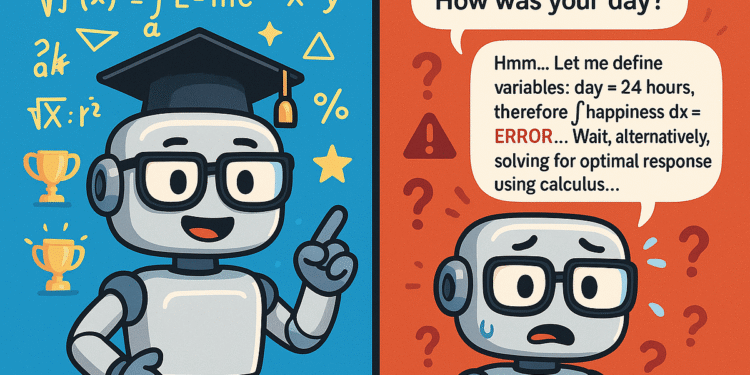

New research reveals why specialized reasoning models excel at calculus but struggle with everyday tasks

We trained AI to be mathematical geniuses, but inadvertently created conversational disasters. — Carnegie Mellon University

AI models set new records on math benchmarks almost every week. Some even beat human experts on competition-style benchmarks like MATH and AIME.

But here’s what nobody talks about: these math geniuses often can’t handle basic conversations.

Researchers at Carnegie Mellon University just published evidence that’ll make you rethink how we train AI. Their study examined over 20 reasoning-focused models and found something shocking.

The better a model gets at math, the worse it becomes at everything else.

The research team tested models across three distinct categories:

- Math Reasoning Tasks: MATH-500, AIME24, AIME25, and OlympiadBench.